Job stack log in

Author: f | 2025-04-24

Jobs; Companies; ROSTR ↗️; Stack ↗️; New Industry Friday ↗️; Blog; Post a job; Browse Jobs By; Companies; Locations; Company types; Employers; Log in; Sign up; Job Seekers; Log in; Sign up; Links; ROSTR on LinkedIn; Stack by ROSTR on Instagram; Jobs by ROSTR on LinkedIn; ROSTR on Instagram; Go to ROSTR; Jobs by ROSTR on Instagram

logging - How to log cron jobs? - Stack Overflow

Requirements. You can scale the Apache Cassandra cluster horizontally by adding more compute instances. You can distribute the seed nodes across the fault domains. You can scale the cluster vertically by changing the shape of each compute instance. Using a shape with a higher core count increases the memory allocated to the compute instance and its network bandwidth.

Application availability
In this architecture, compute instances that perform the same tasks are distributed redundantly across multiple fault domains. This design eliminates any single point of failure in the topology. After the architecture is deployed, you can connect to the public IP address of the Apache Cassandra nodes by using SSH tools such as PuTTY or Git Bash. You can use the Cassandra Query Language (CQL) for DDL and DML operations on the Apache Cassandra database.

Cost
A bare metal shape provides better read and write performance. If your application doesn't need high performance, you can select a VM shape based on the cores, memory, and network bandwidth that you need for your database. You can start with a 1-core shape for the Apache Cassandra nodes, and change the shape later if you need more performance, memory, or network bandwidth.

Deploy
The code required to deploy this reference architecture is available in GitHub. You can pull the code into Oracle Cloud Infrastructure Resource Manager with a single click, create the stack, and deploy it. Alternatively, download the code from GitHub to your computer, customize the code, and deploy the architecture by using the Terraform CLI.

Deploy by using Oracle Cloud Infrastructure Resource Manager:
1. Click If you aren't already signed in, enter the tenancy and user credentials.
2. Review and accept the terms and conditions.
3. Select the region where you want to deploy the stack.
4. Follow the on-screen prompts and instructions to create the stack.
5. After creating the stack, click Terraform Actions, and select Plan.
6. Wait for the job to be completed, and review the plan. To make any changes, return to the Stack Details page, click Edit Stack, and make the required changes. Then, run the Plan action again.
7. If no further changes are necessary, return to the Stack Details page, click Terraform Actions, and select Apply.

Deploy by using the Terraform CLI:
1. Go to GitHub.
2. Clone or download the repository to your local computer.
3. Follow the instructions in the README document.

Change Log
This log lists significant changes:
February 4, 2021
- Added recommendations about using Oracle Cloud Guard and security zones.
- Added information about the Apache Cassandra cluster, internet gateway, and route table in the Architecture section.
- Added instructions for deploying the architecture by using Oracle Cloud Infrastructure Resource Manager.
- Updated the link to the GitHub repository.

Logging not working in laravel queue job - Stack Overflow

Job in Tashkent, Uzbekistan
Education / Teaching (International Education / IB, K12, Math Teacher, Mathematics)
Maths Graduate | ASAP Start - We are seeking a highly motivated and dedicated Math graduate to volunteer as a Math Teacher in Uzbekistan....
today

1. Community Manager
Job in Bangalore, Karnataka, India
Customer Service/HelpDesk (Event Manager / Planner, Customer Success Mgr./ CSM, Client Relationship Manager, Customer Service Rep)
Position: Community Manager - CTC: 6 LPA + Incentives - Location: The Hub Bengaluru - Type: Full-time - Who Are We? - We are...
Job Listing for: The Hub Bengaluru
today

2. ML Ops Engineer
Job in Bangalore, Karnataka, India
IT/Tech (AI Engineer, Machine Learning/ ML Engineer, Data Engineer, Cloud Computing)
Job Profile - At Schneider Electric, we are committed to solving real-world problems to create a sustainable, digitized, new electric...
Job Listing for: Schneider Electric
today

3. Tech Intern - Software Development Engineer/Full Stack
(Remote / Online) - Candidates ideally in Delhi, India
Software Development (Software Engineer, Full Stack Developer)
Position: Tech Intern - Software Development Engineer (Full Stack) - About Hireginie: - Hireginie is a forward-thinking talent...
Job Listing for: Hireginie
today

4. HR, Talent Manager
Job in Bangalore, Karnataka, India
HR/Recruitment (Talent Manager, Employee Relations, HR Manager, Regulatory Compliance Specialist)
We are looking for an HR manager to lead our team. You will take ownership of all HR matters across the company from recruitment to...
Job Listing for: WORKHOME - Coworking & Managed Workspaces
today

5. Data Engineer
Job in Bangalore, Karnataka, India
Engineering (Data Engineer)
Azure Data Engineer (Contract Position) - Location: Gurgaon, Pune, Bengaluru, or Hyderabad only - About the Role We're recruiting...
today

6. Oracle ECM Developer - Senior Associate
Job in Bangalore, Karnataka, India
IT/Tech (IT Consultant)
Greetings from Teamware Solutions a division of Quantum Leap Consulting Pvt. Ltd - We are hiring an Oracle ECM Developer - Senior...
Oracle ECM Developer - Senior Associate Job Listing

View the DBMS jobs log oracle - Stack Overflow

And Logtail itself is one of the most secure tools available. Because it follows industry-standard best practices and works only with data centers compliant with DIN ISO/IEC 27001 certifications, your data is safe during both transit and storage.

With Better Uptime, you can also set up uptime monitoring for your applications, APIs, Cron jobs, and more, with on-call scheduling, so that the right person gets notified promptly if something happens. Several integrations are available, and you can also create a branded status page to communicate incidents, planned maintenance, and other developments with your users.

Both products provide a free plan with generous limits so that you can evaluate whether they meet your needs; the paid plans for each start at $24/month.

2. Elastic Stack (ELK Stack)

The Elastic Stack (formerly known as the ELK Stack) is an open-source log management solution that comprises four distinct tools:

- Elasticsearch: a distributed JSON-based search and analytics engine.
- Logstash: for log ingestion and pipeline processing.
- Kibana: data visualization for Elasticsearch.
- Beats: a set of lightweight single-purpose data shippers.

Since Elastic Stack's core components are open source, you can download and run it without cost. When you install the stack, you'll immediately get access to all the tools you need to collect data from multiple sources, process it, and store it in one centralized location that can scale as data grows. You'll also be able to view and analyze the data through a web-based user interface.

A crucial advantage of the Elastic Stack over Splunk (due to its open source nature) is access to a massive community of developers and a library of plugins for extending the capabilities of the stack. On the other hand, the Elastic Stack can be quite complex to set up and configure before it can function as a production-grade log management tool, and your data needs to be well structured before you can get the most out of it, while Splunk is more usable with unstructured data.

3. New Relic

New Relic is another observability tool primarily used to monitor application and infrastructure performance. It started as an APM but has evolved into a full observability suite with tools for log management, network monitoring, Kubernetes monitoring, and many more for monitoring mobile, web, and cloud applications in real time. These features overlap with Splunk's infrastructure monitoring solutions.

cobol - How to find the job log of ready state mainframe job? - Stack

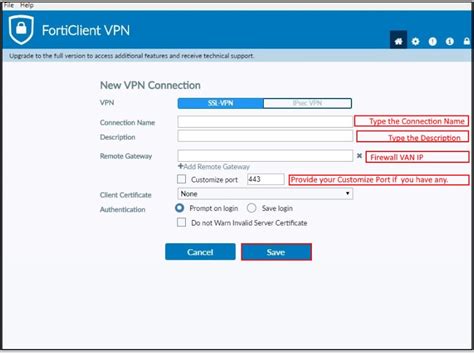

And conditions check box.

Click Launch stack.

In the Stack information panel of the Create stack page, provide the following information:

Stack information:
- Name: (Optional) Specify a name for the Stack.
- Description: (Optional) Specify a description of the Stack.
- Create in compartment: You cannot edit the field.
- Terraform version: You cannot edit the field.

Click Next.

In the Configure variables panel, provide the following information:

Compute configuration:
- OpenVPN access server name: Specify a unique name for your Access Server.
- Compute shape: Select a shape of the compute instance.

Application configuration:
- Administrator username: Specify an administrator username to log into the administration portal. The username should start with a lowercase letter and contain only alphanumeric characters. Note: Do not use openvpn for the Administrator username. It is a reserved username.
- Administrator password: Specify the administrator password. The password should be at least eight alphanumeric characters long and should not contain any special characters.
- Activation key: (Optional) Specify the activation key, which you purchase from OpenVPN, if you intend to use more than two VPN connections with this Access Server compute instance.

Network configuration:
- Network strategy: Select Use existing VCN.
- Existing network: Select the VCN to which your DB system is attached.
- Existing subnet: Select the public subnet of your VCN.

Additional configuration:
- Compartment: Select the compartment in which you want to create all resources. By default, it is set to the compartment that you specify on the Marketplace page.
- Public SSH key string: (Optional) Specify the public SSH key to access the compute instance using SSH. You do not need to specify the string if you use the administration page of the Access Server.

Click Next to open the Review page. Confirm your settings and click Create.

The Resource manager job details page is displayed.
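The administrator username and password rules described above can be checked locally before filling in the form. The following is a minimal sketch, not part of the OpenVPN or Oracle tooling: the function names are hypothetical, and it assumes "alphanumeric" means ASCII letters and digits only.

```python
import re

# Hypothetical helpers; only the rules themselves come from the text above.
RESERVED_USERNAMES = {"openvpn"}  # the text names "openvpn" as reserved


def is_valid_admin_username(username: str) -> bool:
    """Starts with a lowercase letter, alphanumeric only, not reserved."""
    if username in RESERVED_USERNAMES:
        return False
    # Assumption: ASCII letters and digits only.
    return re.fullmatch(r"[a-z][A-Za-z0-9]*", username) is not None


def is_valid_admin_password(password: str) -> bool:
    """At least eight characters, alphanumeric only (no special characters)."""
    return re.fullmatch(r"[A-Za-z0-9]{8,}", password) is not None
```

For instance, `is_valid_admin_username("openvpn")` is rejected because the name is reserved, and `is_valid_admin_password("abc1234!")` is rejected because of the special character.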
The Logs section lists the details of the created stack and the login details of the Access Server in the following format:

    Outputs:
    admin_password = ********
    admin_username = username
    instance_public_url =

Here, instance_public_url is the public IP of the compute instance hosting the Access Server. Note these details as you need them in subsequent tasks.

Configuring an OpenVPN Access Server

The OpenVPN Access Server routes traffic to the DB system. Configuring it includes setting static IP addresses, using routing instead of NAT, and creating and configuring a VPN user.

Using the Console

Use the Console to configure an OpenVPN Access Server to route traffic to the DB system.

Do the following to configure an OpenVPN Access Server:
1. Load the OpenVPN Access Server Administration tool using the IP address and credentials that you get in the instance_public_url field at the end of creating the OpenVPN stack:
2. From the navigation menu, select Configuration, then select VPN settings.
3. Specify a static IP in the Static IP address network field. A static IP is preferred because you must also

Jenkins REST API to get job and job console log - Stack Overflow

Based on user experiences shared across various review platforms and their focus areas, here is a list of the top 10 open-source log analysis tools to help you streamline log ingestion, visualize ad-hoc logs, and improve overall observability. These open-source log trackers and analysis tools are compatible with several log sources/operating systems and are highly regarded for their usability and community support.

| Tool | Category* | GitHub stars (k) | GitHub contributors | Built-in dashboard |
| --- | --- | --- | --- | --- |
| Fluentd | Log management | 13 | 240+ | ✅ |
| Graylog | Log management | 7 | 130+ | ✅ |
| Syslog-ng | Log management | 2 | 140+ | ✅ |
| Nagios | Log management | 2 | 80+ | ✅ |
| Elastic Stack (ELK Stack) – Logstash | Log management | 0.1 | 440+ | ❌ |
| Prometheus | Enterprise monitoring | 56 | 950+ | ✅ |
| Grafana – loki | Enterprise monitoring for logs | 24 | 1,000+ | ✅ |
| SigNoz | Observability tool | 20 | 150+ | ✅ |
| Lnav | Log file navigator | 8 | 50+ | ❌ |
| Angle-grinder | Command line log analysis | 4 | 20+ | ❌ |

*See the description of the categories.

Tool selection & sorting:
- GitHub stars: 50+
- GitHub contributors: 20+
- Sorting: Tools are sorted based on GitHub stars in descending order for the log management category, followed by others based on the number of GitHub stars.

Pricing

| Tool | Paid version: Starting price |
| --- | --- |
| Fluentd | Not published |
| Graylog | $1,250/mo 10GB per day |
| Syslog-ng | $2,800 one time per user |
| Nagios | $14,495 one time payment for suit plan |
| Elastic Stack (ELK Stack) – Logstash | $95/mo |
| Prometheus | Not published |
| Grafana – loki | $25,000/year 100GB per day |
| SigNoz | $199/month, plus $0.3 per GB ingested (15 days) |
| Lnav | No paid version |
| Angle-grinder | No paid version |

Disclaimer: Insights (below) come from user experiences shared on Reddit and G2.

Nagios

Nagios is a host/service/network monitoring application developed in C and

logging - How do I log properly a Laravel Job? - Stack Overflow

Please use the version inside the folder "03-18-2016_release".

Instructions:
1. Place all files from the "jars" folder into the following directory: Program Files > ImageJ > plugins
2. Simply drag moco_.jar onto ImageJ. Alternatively (if that doesn't work for some reason), open ImageJ, click Plugins > Install Plugin..., and choose 10092015_release > moco_.jar.
3. Restart ImageJ, and the moco plugin should show up under Plugins.

---------------------------------

Directions: You must have open both the stack and the template image that you want to align the stack with (e.g. the first image in the stack, or the average image of all frames in the stack).

w: The maximum distance (in pixels) to be translated in the x and y directions (e.g. w = 2 means that the maximum translation can be 2 pixels up/down and 2 pixels left/right).

Downsample value: The number of times to downsample by 2 for faster processing (e.g. downsampling a 512x512 image by 1 makes it 256x256). Leads to problems if the downsample value is too large.

Generate log file: Generates a results table that shows the amount by which each image was translated. Can be saved and used for other images/channels.

Plot: Plots sqrt(x^2 + y^2), i.e. the total translation for each frame (to plot RMS, you must also choose to generate the log file).

If an image in the stack to be registered is translated by amounts that seem too large, then the program will warn you and will allow you to normalize the intensity in the image (which can improve results).

We recommend that you use 8-bit stacks for registration (there are sometimes issues with 16-bit stacks that lead to translation terms that are too large, as noted above). If you generate a log file and save it, you can use it later to register the original 16-bit stack really fast.

Email guevara.james@gmail.com to ask any questions or report any bugs or feature requests.
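The arithmetic behind the w, downsample, and plot settings described above can be sketched in a few lines. This is only an illustration of the stated formulas, not the plugin's own code: the function names are hypothetical, and integer division for odd image sizes is an assumption.

```python
import math


def downsampled_size(width: int, height: int, downsample: int) -> tuple[int, int]:
    """Image size after halving each dimension `downsample` times."""
    factor = 2 ** downsample
    # Assumption: sizes are truncated with integer division.
    return width // factor, height // factor


def total_translation(dx: float, dy: float) -> float:
    """Total translation for one frame, sqrt(x^2 + y^2), as used by the plot."""
    return math.sqrt(dx ** 2 + dy ** 2)


def within_max_translation(dx: float, dy: float, w: float) -> bool:
    """True if both shifts stay within w pixels in the x and y directions."""
    return abs(dx) <= w and abs(dy) <= w
```

With these helpers, `downsampled_size(512, 512, 1)` gives `(256, 256)`, matching the example in the directions, and a frame shifted by 3 pixels in x and 4 pixels in y has a total translation of 5.0.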
Comments


2025-04-05

World is not just possible, it's seamless and efficient.

Scalyr
Scalyr Log Management | Blazing-Fast Log Management Platform | Scalyr
Unlike traditional log management, Scalyr is built for engineers by engineers. We make it easier for engineers to troubleshoot and debug issues by providing real-time visibility throughout their technology stack.

Chaossearch
Why Chaos? The scalable ELK-compatible log analysis platform
CHAOSSEARCH is a fully managed log analytics platform that leverages your AWS S3 as a data store. Our revolutionary technology radically lowers costs for analyzing log data at scale.

Logit
Log & Metrics Management Built on ELK, Grafana & Open Distro
Logit is a centralized logging and metrics management company. This platform serves hundreds of customers around the world, solving complex problems for FTSE 100, Fortune 500, and fast-growing organizations alike. From the Manchester headquarters in the UK, we're incredibly proud to build a product that is such a core foundation of so many businesses. At Logit, they are always looking for the right people. They are at an exciting stage of rapid growth with new projects starting all the time and big opportunities to grow in the role.

Logtail
Experience SQL-compatible log management with ClickHouse
Logtail uses a custom-built technology to process your logs and stores them in ClickHouse. Our stack is significantly more efficient and thus cheaper than the conventional Elastic stack. We pass these savings to you. Search & filter petabytes of logs in moments. See what happened right before and after. Our anomaly detection alerts you when your logs become out of the ordinary. Connect directly to our SQL-compatible storage

2025-03-31

Or HAProxy.
- Support for custom logs.

Ease of use:
- Can process and extract specific log fields using extractors and pipelines.
- Intuitive dashboard creation makes it suitable for operational monitoring.
- Offers integrations like AD for user management (though some features, like role-based LDAP access).

Syslog-ng

Syslog-ng is an open-source log management program that provides a versatile solution for collecting, analyzing, and storing logs. It enables you to collect data from several sources, then parse, classify, rewrite, and correlate the logs into a single format before storing or transferring them to other systems such as Apache Kafka or Elasticsearch.

With Syslog-ng, logs can be:
- Classified and structured using built-in parsers (csv-parser).
- Stored in files, message queues (e.g. AMQP), or databases (e.g. PostgreSQL, MongoDB).
- Forwarded to big data technologies (such as Elasticsearch, Apache Kafka, or Apache Hadoop).

Key features:
- Plugins written in C, Python, Java, Lua, or Perl.
- Support for several message formats, including RFC3164, RFC5424, JSON.
- Linux, Solaris, and BSD operating system support.
- UDP, TCP, TLS, and RELP log transport protocols support.
- TCP and TLS encryption.
- Automated log archiving for 500k+ log messages.

Elastic Stack (ELK Stack) – Logstash

Elastic Stack is a group of open-source products; its main products are Elasticsearch, Kibana, and Logstash.

Logstash serves as a back-end server for the Elasticsearch database. It can collect and process logs from several sources and send them to several destinations, including the Elasticsearch engine or files.

Logstash does not include a built-in dashboard for viewing logs. However, it may be used with other tools such as SigNoz to generate and share log data visualizations and dashboards.

Distinct feature: ELK Stack's distinct feature is the ability to monitor apps built on open-source WordPress installations. In contrast to most out-of-the-box security audit log products, which only track admin and PHP logs, ELK Stack can search web server and database logs.

Prometheus

Prometheus is a metrics monitoring system that runs on cloud-native environments like Kubernetes. It operates as a service that scrapes endpoints and records the metrics in a time-series database. These endpoints are expected to expose metrics in a specific format, also known as the ‘OpenMetrics’ standard. You can generate metrics from logs (for example, counting specific categories of log lines or extracting numbers from lines and using mathematical aggregation methods).

Prometheus outputs information in a structured key/value format.

Some keys are consistently present in a logline:
- ts: The timestamp at which the line was recorded.
- caller: The source code file containing the logging statement.
- level: The severity of the log message (debug, info, warn, or error).

Example: Standard error logs:
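As a rough illustration of the key/value structure described above (not the elided example itself), the sketch below parses such lines and counts them by severity, which is one simple way to derive a metric from logs. The sample lines and function names are hypothetical, and the parser assumes values containing spaces are wrapped in double quotes.

```python
import shlex
from collections import Counter


def parse_key_value_line(line: str) -> dict[str, str]:
    """Split a `key=value key="quoted value"` log line into a dict."""
    fields = {}
    for token in shlex.split(line):  # shlex keeps quoted values together
        key, sep, value = token.partition("=")
        if sep:  # skip tokens that are not key=value pairs
            fields[key] = value
    return fields


def count_by_level(lines: list[str]) -> Counter:
    """Count log lines per severity level."""
    return Counter(parse_key_value_line(l).get("level", "unknown") for l in lines)


# Hypothetical sample lines, made up for this sketch.
SAMPLE_LINES = [
    'ts=2025-04-09T10:00:00.000Z caller=main.go:100 level=info msg="server started"',
    'ts=2025-04-09T10:00:01.000Z caller=scrape.go:200 level=warn msg="scrape failed"',
    'ts=2025-04-09T10:00:02.000Z caller=db.go:300 level=error msg="disk full"',
    'ts=2025-04-09T10:00:03.000Z caller=db.go:310 level=error msg="write aborted"',
]

if __name__ == "__main__":
    print(count_by_level(SAMPLE_LINES))
```

Note that `shlex.split` raises `ValueError` on unbalanced quotes, so real log messages containing stray apostrophes would need a more tolerant parser.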

2025-04-09